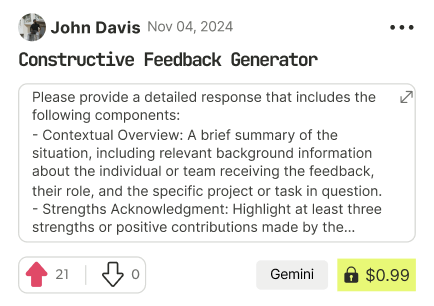

prompt mine App

Find, Create & Share AI Magic

Core Concept Overview: The Always-On Repurposing Engine is an automated system that transforms academic and industry texts into ready-to-publish content across multiple formats, with a target of cutting manual work by about 90%. It uses structured datasets to train and test its components. Below, I'll enhance this prompt with a step-by-step implementation roadmap, including sample Python snippets, so developers can act on it directly. The roadmap builds on your detailed spec and adds practical examples, potential challenges, and optimization tips for quick prototyping.

Step 1: Setting Up the Enhanced Corpora Handling System

Start by building a data pipeline for ingestion and management. Use Python with libraries like pandas for data handling and Elasticsearch for indexing.

- Ingest data: Write a script to pull from sources like PDFs (using PyPDF2) or URLs (requests library).

- Indexing: Integrate Elasticsearch for semantic search with vector embeddings via Hugging Face transformers.

Sample Code Snippet:

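    import requests
    from elasticsearch import Elasticsearch

    # Client for a local node; recent client versions require the URL scheme.
    es = Elasticsearch('http://localhost:9200')

    def ingest_document(url, doc_id):
        """Fetch a document over HTTP and index it in the 'corpora' index."""
        response = requests.get(url, timeout=30)
        response.raise_for_status()  # Fail early on HTTP errors
        text = response.text  # Add PDF/HTML parsing here
        # Metadata values are placeholders; older clients take body= instead of document=.
        es.index(index='corpora', id=doc_id,
                 document={'content': text, 'metadata': {'date': '2023-01-01', 'domain': 'tech'}})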

- Pre-processing: Add de-duplication using fuzzy matching (Levenshtein distance) and grammar checks with LanguageTool.
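
The fuzzy de-duplication step can be sketched in pure Python. This is a minimal illustration, not a tuned implementation: the function names and the 0.9 threshold are assumptions, and the pairwise comparison is quadratic, so a large corpus would need blocking or hashing first.

```python
def levenshtein(a: str, b: str) -> int:
    """Edit distance between a and b (insert, delete, substitute each cost 1)."""
    if len(a) < len(b):
        a, b = b, a
    previous = list(range(len(b) + 1))
    for i, ch_a in enumerate(a, start=1):
        current = [i]
        for j, ch_b in enumerate(b, start=1):
            cost = 0 if ch_a == ch_b else 1
            current.append(min(previous[j] + 1,          # deletion
                               current[j - 1] + 1,       # insertion
                               previous[j - 1] + cost))  # substitution
        previous = current
    return previous[-1]


def similarity(a: str, b: str) -> float:
    """Normalized similarity in [0, 1]; 1.0 means identical strings."""
    longest = max(len(a), len(b))
    return 1.0 if longest == 0 else 1.0 - levenshtein(a, b) / longest


def deduplicate(texts, threshold=0.9):
    """Keep the first text of every group of near-duplicates."""
    kept = []  # pairs of (original text, normalized text)
    for text in texts:
        normalized = " ".join(text.lower().split())
        if all(similarity(normalized, seen) < threshold for _, seen in kept):
            kept.append((text, normalized))
    return [original for original, _ in kept]
```

Texts are lowercased and whitespace-collapsed before comparison, so trivial formatting differences do not count as edits.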

Challenge: Handle large-scale data by batching uploads to avoid memory issues. Version datasets using Git for reproducibility.
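
One way to batch uploads is to stream documents in fixed-size chunks so that only one chunk is in memory at a time. The sketch below is an assumption-laden illustration: `batched` and `to_bulk_actions` are hypothetical helper names, and the actual upload (shown only as a comment) would go through the `bulk` helper of the Elasticsearch Python client.

```python
from itertools import islice


def batched(items, batch_size):
    """Yield successive lists of at most batch_size items from any iterable."""
    iterator = iter(items)
    while True:
        batch = list(islice(iterator, batch_size))
        if not batch:
            return
        yield batch


def to_bulk_actions(docs, index="corpora"):
    """Convert (doc_id, text) pairs into action dicts for a bulk upload."""
    return [{"_index": index, "_id": doc_id, "_source": {"content": text}}
            for doc_id, text in docs]


if __name__ == "__main__":
    # A generator stands in for a large document stream.
    documents = ((f"doc-{i}", f"text {i}") for i in range(5))
    for batch in batched(documents, batch_size=2):
        actions = to_bulk_actions(batch)
        # With a live cluster: elasticsearch.helpers.bulk(es, actions)
        print(len(actions), [action["_id"] for action in actions])
```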

Step 2: Building the Multi-Format Generation Engine

Use Jinja2 for templates. Extract key elements like summaries or entities with spaCy NLP.

- Dynamic templates: Create base templates for outputs, e.g., a blog post with placeholders for title, body, and images.

Sample Template (in a .jinja file):

Title: {{ title }}

Introduction: {{ summary }}

{% for section in sections %}

Section: {{ section.heading }}

Content: {{ section.text }}

{% endfor %}

- Generation logic: Parse input with NLP to fill templates conditionally, e.g., if input has figures, add visual placeholders.

Sample Code Snippet:

    import jinja2
    import spacy

    nlp = spacy.load('en_core_web_sm')

    def generate_blog(input_text, template_path):
        """Render the blog template from raw input text."""
        doc = nlp(input_text)
        title = doc[:10].text  # Simple extraction (first 10 tokens); enhance with topic modeling
        env = jinja2.Environment(loader=jinja2.FileSystemLoader('.'))
        template = env.get_template(template_path)
        # 'sections' is left empty here; fill it with dicts holding 'heading' and 'text'
        return template.render(title=title, summary='Generated summary here', sections=[])
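
The conditional filling mentioned above (adding visual placeholders only when the input has figures) could start from a sketch like this one. The citation pattern and the `build_context` helper are assumptions for illustration; real inputs may reference figures differently.

```python
import re

# Assumed convention: figures are cited as "Figure 3", "Fig. 3", or "Fig 3".
FIGURE_PATTERN = re.compile(r"\b(?:Figure|Fig\.?)\s*(\d+)", re.IGNORECASE)


def build_context(input_text: str) -> dict:
    """Build template variables, adding figure placeholders only when needed."""
    numbers = sorted({int(n) for n in FIGURE_PATTERN.findall(input_text)})
    return {
        "has_figures": bool(numbers),
        "figures": [f"[Placeholder for Figure {n}]" for n in numbers],
    }
```

The resulting dict can be passed to the template as extra keyword arguments, with the template wrapping its figure block in `{% if has_figures %} ... {% endif %}`.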

Customization: Allow users to upload branding styles via a config file.
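
A branding config could be as simple as a JSON object merged over defaults. The keys below are hypothetical; the real set depends on what your templates expose.

```python
import json

# Hypothetical defaults; the real branding keys depend on your templates.
DEFAULT_BRANDING = {
    "brand_name": "Example Co",
    "tone": "neutral",
    "primary_color": "#000000",
}


def load_branding(config_text: str) -> dict:
    """Merge a user-supplied JSON config over the defaults, ignoring unknown keys."""
    user_config = json.loads(config_text)
    if not isinstance(user_config, dict):
        raise ValueError("Branding config must be a JSON object")
    merged = dict(DEFAULT_BRANDING)
    merged.update({k: v for k, v in user_config.items() if k in DEFAULT_BRANDING})
    return merged
```

Dropping unknown keys keeps an uploaded file from injecting unexpected variables into templates.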

Step 3: Implementing the Verification Subsystem

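Automate checks: run grammar checks with a tool such as LanguageTool (NLTK is better suited to tokenization and parsing than to grammar checking), and approximate fact verification against the source via cosine similarity on embeddings. Similarity measures semantic closeness, so treat a high score as a proxy for factual consistency, not proof of it.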

- Metrics tracking: Log KPIs to a PostgreSQL database.

Sample Code Snippet:

    from sklearn.metrics.pairwise import cosine_similarity
    from sentence_transformers import SentenceTransformer

    model = SentenceTransformer('all-MiniLM-L6-v2')

    def verify_factual_accuracy(source, output, threshold=0.85):
        """Return True if output is semantically close to source."""
        source_emb = model.encode(source)
        output_emb = model.encode(output)
        # cosine_similarity returns a 1x1 matrix for two single vectors
        similarity = cosine_similarity([source_emb], [output_emb])[0][0]
        return bool(similarity > threshold)  # Threshold for accuracy

- Human feedback: Add a simple web interface (using Flask) for reviewers to rate and edit.

Report: Generate PDF reports summarizing metrics, e.g., 90% factual accuracy on test sets.
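
The KPI logging and the summary that feeds the report can be prototyped as follows. This sketch uses the standard-library `sqlite3` module as a stand-in for PostgreSQL so it runs without a server; the table layout and function names are assumptions.

```python
import sqlite3


def open_metrics_db(path=":memory:"):
    """Open (or create) the KPI store; SQLite stands in for PostgreSQL here."""
    conn = sqlite3.connect(path)
    conn.execute(
        "CREATE TABLE IF NOT EXISTS kpi ("
        "doc_id TEXT, metric TEXT, value REAL, "
        "logged_at TEXT DEFAULT CURRENT_TIMESTAMP)"
    )
    return conn


def log_kpi(conn, doc_id, metric, value):
    """Record one measurement for one document."""
    conn.execute(
        "INSERT INTO kpi (doc_id, metric, value) VALUES (?, ?, ?)",
        (doc_id, metric, float(value)),
    )
    conn.commit()


def summarize(conn):
    """Return the mean and count per metric, ready to drop into a report."""
    rows = conn.execute(
        "SELECT metric, AVG(value), COUNT(*) FROM kpi GROUP BY metric ORDER BY metric"
    )
    return {metric: {"mean": mean, "count": count} for metric, mean, count in rows}
```

When moving to PostgreSQL with a driver such as psycopg2, the `?` placeholders become `%s`; the SQL itself should carry over with little change.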

Step 4: Adding Domain-Specific Enhancement Modules

Modularize with plug-and-play classes, e.g., a MedicalModule that pulls from biomedical vocabularies such as MeSH, the thesaurus used to index PubMed.

- Term extraction: Use scikit-learn for topic modeling and glossary generation.

Example: For engineering docs, enforce APA style rules automatically.
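
A plug-and-play layout might look like the sketch below: a shared interface, one concrete module, and a registry keyed by domain name. Everything here is hypothetical, including the two hard-coded glossary terms, which stand in for a lookup against an external vocabulary.

```python
from abc import ABC, abstractmethod


class DomainModule(ABC):
    """Interface every domain plug-in implements."""

    name = "base"

    @abstractmethod
    def glossary(self, text: str) -> dict:
        """Return {term: definition} for domain terms found in text."""


class MedicalModule(DomainModule):
    name = "medical"
    # Hypothetical hard-coded terms; a real module would query an external vocabulary.
    TERMS = {
        "hypertension": "Persistently elevated blood pressure.",
        "tachycardia": "Abnormally fast resting heart rate.",
    }

    def glossary(self, text: str) -> dict:
        lowered = text.lower()
        return {term: definition for term, definition in self.TERMS.items()
                if term in lowered}


REGISTRY = {}


def register(module: DomainModule) -> None:
    """Make a module available under its domain name."""
    REGISTRY[module.name] = module


def enhance(text: str, domain: str) -> dict:
    """Attach a glossary if a module for the domain is registered."""
    module = REGISTRY.get(domain)
    return {"text": text, "glossary": module.glossary(text) if module else {}}


register(MedicalModule())
```

Adding a new domain then means writing one subclass and one `register` call, with no change to `enhance`.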

Step 5: Creating the Integrated Polish Pipeline

Chain tools: First plagiarism check with difflib, then style adjustment using transformers for paraphrasing.
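
For the first link in the chain, a `difflib`-based overlap check could look like this. It is a rough sketch: it compares the output only against its own source at word level, and the function names are assumptions.

```python
from difflib import SequenceMatcher


def overlap_ratio(source: str, output: str) -> float:
    """Word-level similarity in [0, 1] between source and output."""
    return SequenceMatcher(None, source.lower().split(), output.lower().split()).ratio()


def longest_copied_run(source: str, output: str) -> str:
    """Longest contiguous run of words that output shares with source."""
    src, out = source.split(), output.split()
    matcher = SequenceMatcher(None, src, out, autojunk=False)
    match = matcher.find_longest_match(0, len(src), 0, len(out))
    return " ".join(src[match.a:match.a + match.size])
```

A high ratio or a long copied run would flag the output for the paraphrasing step that follows.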

- Readability: Score with Flesch-Kincaid and optimize sentences to under 20 words average.
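
The readability check can be approximated with the standard library. The sketch below computes the Flesch-Kincaid grade level, 0.39 × (words per sentence) + 11.8 × (syllables per word) − 15.59, using a crude vowel-group syllable counter, so scores are estimates. The function names and the regex-based sentence splitter are assumptions.

```python
import re


def split_sentences(text):
    """Naive splitter: break after '.', '!' or '?' followed by whitespace."""
    return [s for s in re.split(r"(?<=[.!?])\s+", text.strip()) if s]


def count_syllables(word):
    """Rough heuristic: count vowel groups, discounting a silent trailing 'e'."""
    word = word.lower()
    count = len(re.findall(r"[aeiouy]+", word))
    if word.endswith("e") and not word.endswith("le") and count > 1:
        count -= 1
    return max(count, 1)


def readability(text):
    """Return average sentence length, estimated grade, and overlong sentences."""
    sentences = split_sentences(text)
    words = re.findall(r"[A-Za-z']+", text)
    if not sentences or not words:
        raise ValueError("text must contain at least one sentence")
    syllables = sum(count_syllables(w) for w in words)
    avg_len = len(words) / len(sentences)
    grade = 0.39 * avg_len + 11.8 * (syllables / len(words)) - 15.59
    return {
        "avg_sentence_length": avg_len,
        "fk_grade": grade,
        # Candidates for splitting when the average exceeds the 20-word target
        "long_sentences": [s for s in sentences if len(s.split()) > 20],
    }
```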

Step 6: Developing the Dynamic Prompt Optimizer

Use reinforcement learning via libraries like Stable Baselines to refine LLM prompts.

- Optimization loop: Generate 5 prompt variants, evaluate via verification scores, and select the best.

Sample: Store in a JSON file for reuse, e.g., {"prompt_v1": "Rephrase this for a blog: {text}"} (JSON requires double quotes).
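
Before reaching for reinforcement learning, the generate-evaluate-select loop can be prototyped as a plain search over variants. In this sketch the style prefixes and `toy_score` are placeholders (assumptions); in practice the score would come from the verification subsystem applied to the text an LLM produces for each prompt.

```python
import json


def make_variants(base_prompt, styles):
    """Create one named prompt variant per style prefix."""
    return {f"prompt_v{i}": f"{style} {base_prompt}"
            for i, style in enumerate(styles, start=1)}


def select_best(variants, score_fn):
    """Score every variant and return (name, prompt, score) of the winner."""
    scored = [(name, prompt, score_fn(prompt)) for name, prompt in variants.items()]
    return max(scored, key=lambda item: item[2])


def toy_score(prompt):
    # Placeholder scorer (assumption): prefers shorter prompts. Replace with the
    # verification score of the output generated from this prompt.
    return 1.0 / len(prompt)


if __name__ == "__main__":
    styles = ["Briefly,", "In a friendly tone,", "For experts,",
              "For beginners,", "Step by step,"]
    variants = make_variants("Rephrase this for a blog: {text}", styles)
    name, prompt, score = select_best(variants, toy_score)
    # Serialize the variants plus the winner's name for reuse.
    print(json.dumps({"best": name, "variants": variants}, indent=2))
```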

Step 7: Setting Up the Continuous Learning System

Collect feedback via API endpoints and retrain models monthly using Hugging Face datasets.

- A/B testing: Run parallel versions and compare KPIs.
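
Comparing KPIs between two parallel versions can start with a mean difference and Welch's t statistic, as in this sketch. The function name and the sample scores in the usage note are illustrative assumptions, and each sample needs at least two values.

```python
from math import sqrt
from statistics import mean, variance


def compare_variants(scores_a, scores_b):
    """Compare two KPI samples; returns the means, the lift of B over A, and Welch's t."""
    mean_a, mean_b = mean(scores_a), mean(scores_b)
    # Standard error of the difference, without assuming equal variances
    std_err = sqrt(variance(scores_a) / len(scores_a)
                   + variance(scores_b) / len(scores_b))
    t_stat = (mean_b - mean_a) / std_err if std_err > 0 else None
    return {"mean_a": mean_a, "mean_b": mean_b,
            "lift": mean_b - mean_a, "t_stat": t_stat}
```

For example, `compare_variants([0.80, 0.82, 0.78, 0.81], [0.88, 0.90, 0.86, 0.89])` reports a lift of about 0.08 for version B; a large absolute t value suggests the gap is unlikely to be noise, though a proper test would also compute a p-value.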

Monitoring: Use Prometheus for real-time performance tracking.

Edge Cases and Optimizations:

- Ambiguity: Use disambiguation prompts to LLMs like GPT-4.

- Ethical handling: Always add citations (e.g., via BibTeX integration) and bias checks with fairness libraries.

- Scalability: Deploy on AWS with S3 for storage and Lambda for async processing. For large datasets, integrate a vector database such as Pinecone to speed up retrieval (target: roughly 50% faster, to be confirmed by benchmarking on your own corpus).

- Multi-lingual: Add support with mBART models for translation.
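
For the citation requirement in the list above, emitting BibTeX needs little more than string formatting. The helper below is a hypothetical sketch, and the entry in the usage note is a made-up placeholder, not a real reference.

```python
def to_bibtex(key, entry_type="article", **fields):
    """Format one BibTeX entry; fields with empty values are skipped."""
    lines = [f"@{entry_type}{{{key},"]
    for name, value in fields.items():
        if value:
            lines.append(f"  {name} = {{{value}}},")
    lines.append("}")
    return "\n".join(lines)
```

Calling `to_bibtex("doe2023", author="Doe, Jane", title="An Example Source", year=2023)` yields an `@article{doe2023, ...}` entry that can be appended to the `.bib` file shipped with each generated piece.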

Implementation Timeline: Week 1-2: Core handling and generation. Week 3: Verification and polish. Week 4: Optimizer and learning. Test on a sample corpus of 100 docs, aiming for under 5-min processing. Hardware: Start with a local setup (16-core CPU, NVIDIA GPU) and scale to cloud.

This roadmap makes your engine prototype-ready. Feel free to tweak specifics or ask for code expansions!
